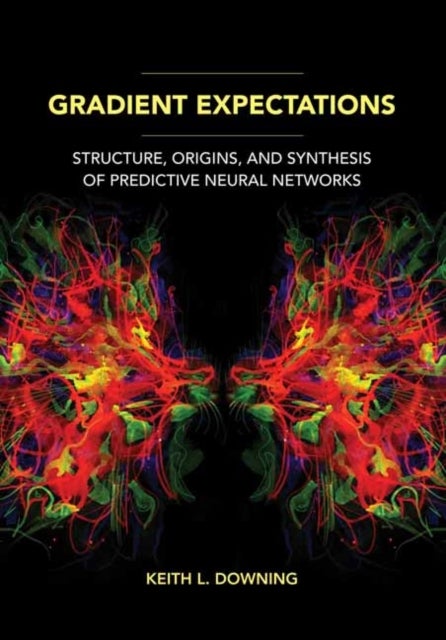

Gradient Expectations by Keith L. Downing

789,-

<b>An insightful investigation into the mechanisms underlying the predictive functions of neural networks, and their ability to chart a new path for AI.</b><br><br>Prediction is a cognitive advantage like few others, inherently linked to our ability to survive and thrive. Our brains are awash in signals that embody prediction. Can we extend this capability more explicitly into synthetic neural networks to improve the function of AI and enhance its place in our world?<br><br><i>Gradient Expectations</i> is a bold effort by Keith L. Downing to map the origins and anatomy of natural and artificial neural networks and to explore how, when designed as predictive modules, their components might serve as the basis for the simulated evolution of advanced neural network systems.<br><br>Downing delves into the known neural architecture of the mammalian brain to illuminate the structure of predictive networks and determine more precisely how the ability to predict might have evolved from more pri